What is data science? Learn how to apply it to process automation today!

The Industrial Internet of Things (IIoT) goes beyond collecting and organizing field data. With data science, you can use all those values to create valuable insights. Today, let’s find out how to apply data science to your IIoT solutions.

We’ve talked about collecting field data and using it in cloud solutions such as Netilion. Cloud services provide a huge list of benefits for your applications, but what if you want to dig a bit deeper? You can use data science to forecast values or develop advanced interpretations by pulling from more than one data source.

Today, let’s look at the basics of data science and how you can apply this concept to your plant. It may sound very far away from standard process automation, but with the merging of OT and IT, this knowledge is crucial, so we’re here to share everything with you.

What is data science?

Wikipedia defines it as a “field that uses scientific methods, processes, algorithms and systems to extract knowledge and insights from structured and unstructured data.”

Even if you’ve never heard of it, you’ve probably heard some of the buzzwords emanating from this field – machine learning, deep learning, forecasting, pattern detection, and much more.

These terms overlap heavily, and most of them are different ways to talk about the same topic: machine learning.

What is machine learning in data science?

Machine learning is a major part of data science and the source of many recent tech advances, from predictions used in high-frequency trading on stock markets to deepfakes that fool your sense of what’s real. We can further classify machine learning, as you see in this table:

![Machine learning](https://netilion.endress.com/blog/content/images/2021/05/Machine-Learning-1.png)

Many of these fields focus on predicting stock prices and the weather or building self-driving cars. What about process automation? Can we apply any of this to our industry? Yes, totally! Check out one compelling use case in this video:

With machine learning, we can improve forecasts and compare them to important thresholds, such as when a tank needs emptying or filling. Let’s start with the data, in particular where we store it.
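As a rough illustration of comparing a forecast to a threshold, here is a minimal sketch that fits a straight line to tank level readings and estimates when the trend reaches a limit. The function names, the sample readings, and the 90 % threshold are assumptions made up for this example, and a real project would likely use a richer model than a straight line.

```python
from typing import Optional, Sequence, Tuple


def fit_line(times: Sequence[float], levels: Sequence[float]) -> Tuple[float, float]:
    """Ordinary least-squares fit of level = slope * time + intercept."""
    n = len(times)
    mean_t = sum(times) / n
    mean_y = sum(levels) / n
    sxx = sum((t - mean_t) ** 2 for t in times)
    sxy = sum((t - mean_t) * (y - mean_y) for t, y in zip(times, levels))
    slope = sxy / sxx
    return slope, mean_y - slope * mean_t


def hours_until_threshold(
    times: Sequence[float], levels: Sequence[float], threshold: float
) -> Optional[float]:
    """Estimate the time from the last reading until the trend hits the threshold.

    Returns None when the trend is flat or the crossing point lies in the past.
    """
    slope, intercept = fit_line(times, levels)
    if slope == 0:
        return None
    crossing_time = (threshold - intercept) / slope
    remaining = crossing_time - times[-1]
    return remaining if remaining > 0 else None


# Hypothetical readings: hours since start and tank level in percent.
hours = [0, 1, 2, 3, 4]
level_percent = [50, 52, 54, 56, 58]
remaining_hours = hours_until_threshold(hours, level_percent, threshold=90)
```

With these made-up readings the level rises two percentage points per hour, so the sketch estimates the remaining time until the tank reaches 90 %.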

Usually, control systems come with a database to store historical data. We could extract data from there as a CSV or Excel report. If you’re nice to the IT folks and you have the skills, you could use SQL (Structured Query Language) instead. And now, emerging IIoT technologies offer a new possibility: application programming interfaces (APIs)!
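To give a feel for the SQL route, here is a small sketch that uses Python's built-in `sqlite3` module as a stand-in for a control system's historian database. The `history` table, its columns, and the tag name `LT-101` are hypothetical, and a real historian will have its own schema and connection method.

```python
import sqlite3


def load_history(conn: sqlite3.Connection, tag: str) -> list:
    """Return (recorded_at, value) rows for one tag, oldest first."""
    query = (
        "SELECT recorded_at, value FROM history "
        "WHERE tag = ? ORDER BY recorded_at"
    )
    return conn.execute(query, (tag,)).fetchall()


# In-memory stand-in for the historian, filled with made-up values.
conn = sqlite3.connect(":memory:")
conn.execute("CREATE TABLE history (tag TEXT, recorded_at TEXT, value REAL)")
conn.executemany(
    "INSERT INTO history VALUES (?, ?, ?)",
    [
        ("LT-101", "2021-05-01T01:00:00Z", 52.0),
        ("LT-101", "2021-05-01T00:00:00Z", 50.0),
        ("TT-200", "2021-05-01T00:00:00Z", 21.5),
    ],
)
rows = load_history(conn, "LT-101")
```

The query uses a `?` placeholder instead of string formatting, which keeps the tag name from being interpreted as SQL.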

What is an API in data science?

An API is an interface that lets you read or write information in a system. The most common style today is the RESTful API with JSON. It exchanges information in the JSON (JavaScript Object Notation) format using one of four kinds of request:

- GET: show a target resource’s state

- POST: process a request in the target resource

- PUT or PATCH: set the target resource to the state defined in a request

- DELETE: delete the target resource’s state

These four methods map to CRUD: create (POST), read (GET), update (PUT or PATCH) and delete (DELETE).
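The mapping above can be sketched with Python's standard library. The base URL and the `/assets/42` path are placeholders invented for this example, not real endpoints, and the requests are only built here, not sent.

```python
import json
import urllib.request
from typing import Optional

BASE_URL = "https://api.example.com/v1"  # hypothetical server

# CRUD operation -> HTTP method (PUT is the other common choice for updates)
CRUD_TO_HTTP = {
    "create": "POST",
    "read": "GET",
    "update": "PATCH",
    "delete": "DELETE",
}


def build_request(
    operation: str, path: str, payload: Optional[dict] = None
) -> urllib.request.Request:
    """Build (but do not send) an HTTP request for a CRUD operation."""
    data = json.dumps(payload).encode("utf-8") if payload is not None else None
    headers = {"Accept": "application/json"}
    if data is not None:
        headers["Content-Type"] = "application/json"
    return urllib.request.Request(
        BASE_URL + path,
        data=data,
        headers=headers,
        method=CRUD_TO_HTTP[operation],
    )


read_request = build_request("read", "/assets/42")
update_request = build_request("update", "/assets/42", {"description": "Tank 1"})
```

Passing a request like these to `urllib.request.urlopen` would send it. A real API would usually also require authentication headers.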

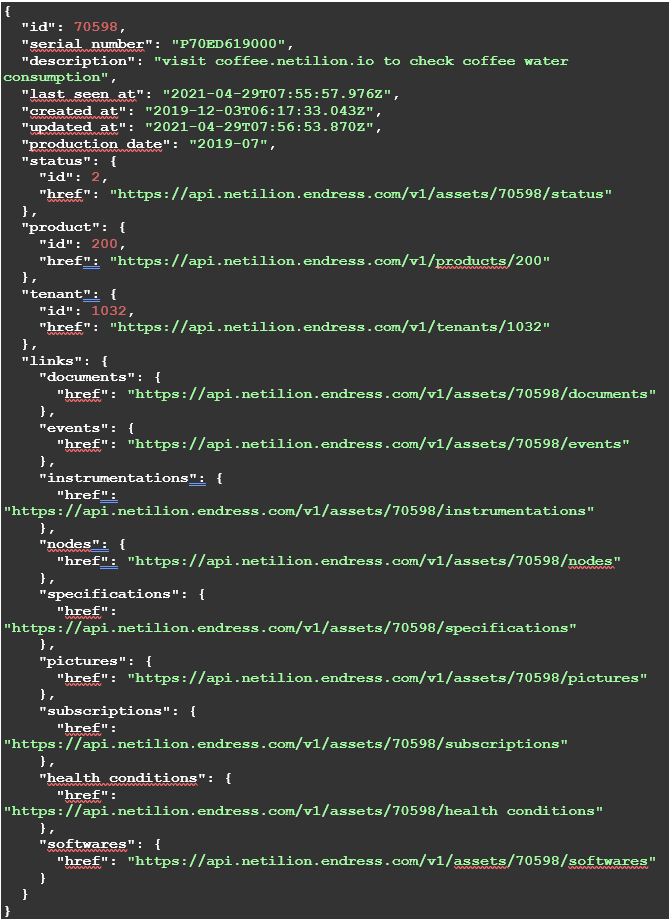

You can access these methods on the API server via HTTP(S), which we all know from surfing the internet. But instead of getting a pretty webpage result like you’d see on Google Chrome, you’ll get a JSON object:

Don’t worry if that looks unfamiliar! In data science, we usually focus on the “GET” method, as it allows us to pull data from an API in the JSON format, then convert it to any format we want, such as a pandas DataFrame object in Python.
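Here is a minimal sketch of that GET-then-convert step using only Python's standard library. The response shape (a `values` list with `timestamp`, `key`, `value` and `unit` fields) is an assumption for illustration, so check your API's documentation for the real structure.

```python
import json
import urllib.request


def fetch_body(url: str) -> str:
    """Send a GET request and return the response body as text."""
    with urllib.request.urlopen(url) as response:
        return response.read().decode("utf-8")


def to_rows(body: str) -> list:
    """Flatten the (assumed) JSON structure into a list of row dictionaries."""
    payload = json.loads(body)
    return [
        {
            "timestamp": item["timestamp"],
            "key": item["key"],
            "value": float(item["value"]),
            "unit": item["unit"],
        }
        for item in payload["values"]
    ]


# Made-up response body, standing in for what fetch_body() might return.
SAMPLE_RESPONSE = """
{
  "values": [
    {"timestamp": "2021-05-01T00:00:00Z", "key": "level", "value": 50.0, "unit": "%"},
    {"timestamp": "2021-05-01T01:00:00Z", "key": "level", "value": 52.0, "unit": "%"}
  ]
}
"""

rows = to_rows(SAMPLE_RESPONSE)
```

If pandas is installed, `pandas.DataFrame(rows)` turns this list of dictionaries into a DataFrame with one column per field.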

You can learn more about APIs in our article “What should I know about APIs in process automation?” if you like.

How can I use data science in process automation?

Glad you asked! I made a video to dive into the details of how to use data science in process automation:

So data science is definitely relevant for process automation. IIoT ecosystems, such as Netilion, can help you get projects off the ground. Data scientists can focus on the science part of their work and leave the collection, storage, and retrieval to the IIoT ecosystem, from prototype to production!

By the way, if your data scientists prefer databases and SQL instead of API calls, that’s fine. You can make a Netilion Webhook send all the data to your own application, which then saves it to a database. So if that’s what you need, Netilion Connect has you covered.
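To make the idea concrete, here is a rough sketch of such an application: a tiny HTTP endpoint that accepts POST requests and writes each one to a SQLite database. The payload fields (`asset_id`, `timestamp`, `value`), the table layout, and the port are assumptions for illustration only. The actual Netilion Webhook payload is described in the webhook documentation linked below.

```python
import json
import sqlite3
from http.server import BaseHTTPRequestHandler, HTTPServer


def init_db(conn: sqlite3.Connection) -> None:
    """Create the (hypothetical) readings table if it does not exist yet."""
    conn.execute(
        "CREATE TABLE IF NOT EXISTS readings "
        "(asset_id TEXT, recorded_at TEXT, value REAL)"
    )


def store_reading(conn: sqlite3.Connection, body: bytes) -> None:
    """Parse one webhook body (assumed JSON shape) and save it as a row."""
    event = json.loads(body)
    conn.execute(
        "INSERT INTO readings VALUES (?, ?, ?)",
        (str(event["asset_id"]), event["timestamp"], float(event["value"])),
    )
    conn.commit()


def make_handler(conn: sqlite3.Connection):
    """Build a request handler class that writes to the given connection."""

    class WebhookHandler(BaseHTTPRequestHandler):
        def do_POST(self):
            length = int(self.headers.get("Content-Length", 0))
            try:
                store_reading(conn, self.rfile.read(length))
            except (KeyError, ValueError, TypeError):
                self.send_response(400)  # malformed or unexpected payload
            else:
                self.send_response(204)  # stored, nothing to return
            self.end_headers()

    return WebhookHandler


def serve(port: int = 8080, db_path: str = "readings.db") -> None:
    """Run the receiver until interrupted (single-threaded, for prototyping)."""
    conn = sqlite3.connect(db_path)
    init_db(conn)
    HTTPServer(("", port), make_handler(conn)).serve_forever()
```

Calling `serve()` starts the receiver. A production setup would also need HTTPS and a way to verify that incoming requests really come from the webhook sender.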

Relevant links:

- Link to GitHub Repository: https://github.com/pcps-afa/datascience_netilion

- Using Pickle to make your model portable & host that model on Heroku: https://medium.com/analytics-vidhya/deploying-a-machine-learning-model-as-a-flask-app-on-heroku-part-1-b5e194fed16d

- Video on how to set up your Netilion Connect subscription with Webhooks: https://www.youtube.com/watch?v=K3wJfXcD9g4&t=1s

- Further reading on Netilion Webhooks: https://developer.netilion.endress.com/support/webhooks

We hope that you enjoyed this blog post and look forward to the next!

Cheers!